How we got rid of the database–part 3

In my last two posts (part 1 and part 2) I described how a command that is sent from the client is handled by an aggregate of the domain model. I also discussed how the aggregate, when executing the command, raises an event which is then stored in the event store and furthermore published asynchronously by the infrastructure.

In this post I want to show how these published events can be used by observers (or projection generators) to create the read model.

Querying

When the user navigates to a screen, the client sends a query to the read model. The query handler in the read model collects the requested data from the projections that make up the read model and returns this data to the client. The client never sends queries to the domain. The domain model is not designed to accept queries and return data; it is optimized exclusively for write operations.

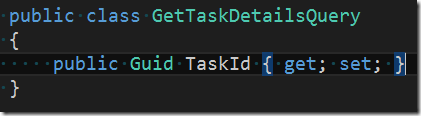

Assuming the user navigates to the screen where existing tasks can be edited, the query that the client triggers could be

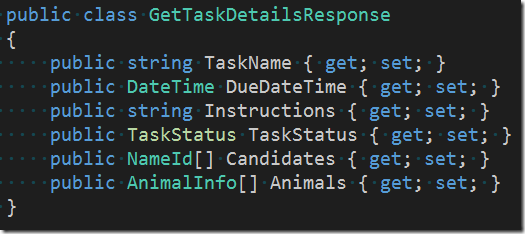

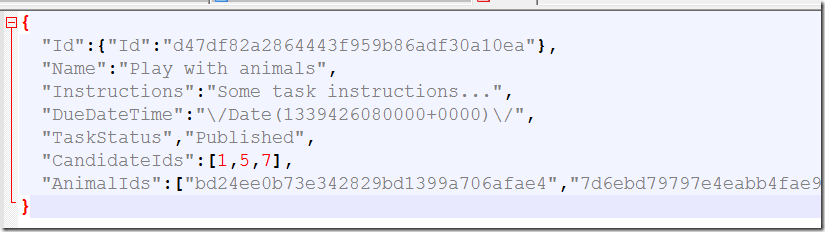

and the data returned by the query handler would look like this

where

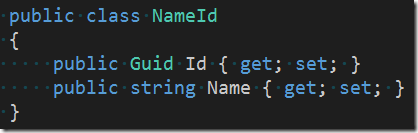

contains the full name and id of the candidates that are assigned to this task and

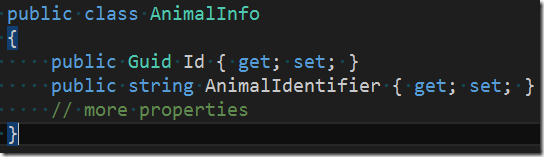

contains all the details of the animal that is the target of the task.
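To make the shape of such a query and its response a bit more tangible, here is a minimal sketch. All type and property names (TaskDetailsQuery, TaskDetailsResponse, CandidateInfo, AnimalInfo, Species and so on) are assumptions made for illustration and are not taken from the real code base. The sketch uses C# records, so it assumes C# 9 or later.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical query sent by the client when the edit-task screen opens.
public sealed record TaskDetailsQuery(Guid TaskId);

// Hypothetical response: a small object graph rather than a flat row.
public sealed record CandidateInfo(Guid Id, string FullName);
public sealed record AnimalInfo(Guid Id, string Name, string Species);
public sealed record TaskDetailsResponse(
    Guid TaskId,
    string Name,
    IReadOnlyList<CandidateInfo> Candidates,
    AnimalInfo Animal);

// The handler only reads from the read model; it never talks to the domain.
public sealed class TaskDetailsQueryHandler
{
    // Assumed lookup into the projection store; returns null if no view exists.
    private readonly Func<Guid, TaskDetailsResponse> _loadView;

    public TaskDetailsQueryHandler(Func<Guid, TaskDetailsResponse> loadView)
        => _loadView = loadView;

    public TaskDetailsResponse Handle(TaskDetailsQuery query)
        => _loadView(query.TaskId)
           ?? throw new KeyNotFoundException($"No task details view for task {query.TaskId}.");
}
```

The point of the sketch is that the handler does nothing clever: it looks up a ready-made view and hands it back.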

Where does this data come from, I hear you ask… Well, that’s the topic I’ll discuss next.

Generating the read model

Whenever we design a new screen we need data to display on it. We therefore define one or more projections that are tailored to that screen. Ideally, a projection lets us get all the data we want with one single read operation against the data store. In reality that is not always possible, so we state the principle as follows: design the projections in such a way that the data needed for a screen can be retrieved with the minimal number of read operations, ideally just one.

As a consequence, this principle requires us to store our data in a highly de-normalized way. We accept data duplication in the read model as necessary and do not try to avoid it. Storage space is extremely cheap nowadays.

Since we are not using an RDBMS to store our read model, the projections do not have to be “flat”. We can define projections that are made of object graphs.

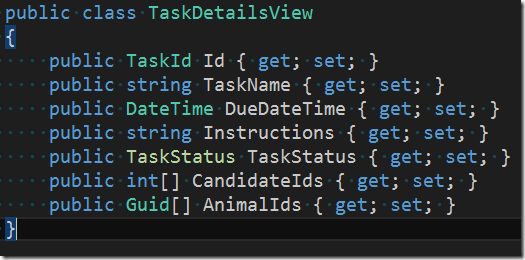

A first approach would thus be to define a projection that looks similar to the query response object that we defined above. Let’s do so.

Please note that I use the typed Id (TaskId) introduced in part 1 in my view.
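As a rough sketch, such a de-normalized view could be a small object graph like the one below. The TaskId record is only a stand-in for the typed id from part 1 (whose real definition may differ), and the nested CandidateView and AnimalView classes as well as all property names are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for the typed id introduced in part 1; the real type may look different.
public sealed record TaskId(Guid Id);

// Hypothetical nested views: candidate and animal data is duplicated into
// the task view so that a single read returns everything the screen needs.
public sealed class CandidateView
{
    public Guid Id { get; set; }
    public string FullName { get; set; } = "";
}

public sealed class AnimalView
{
    public Guid Id { get; set; }
    public string Name { get; set; } = "";
    public string Species { get; set; } = "";
}

// The projection itself: not flat, but an object graph.
public sealed class TaskDetailsView
{
    public TaskId Id { get; set; }
    public string Name { get; set; } = "";
    public bool Published { get; set; }
    public List<CandidateView> Candidates { get; set; } = new();
    public AnimalView Animal { get; set; }
}
```

Because the view already contains the candidates and the animal, the query handler does not have to join anything at read time.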

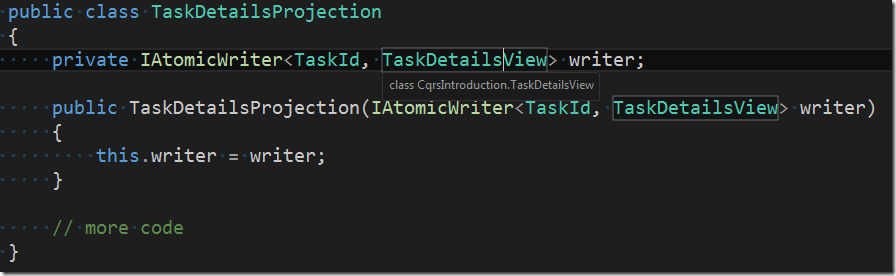

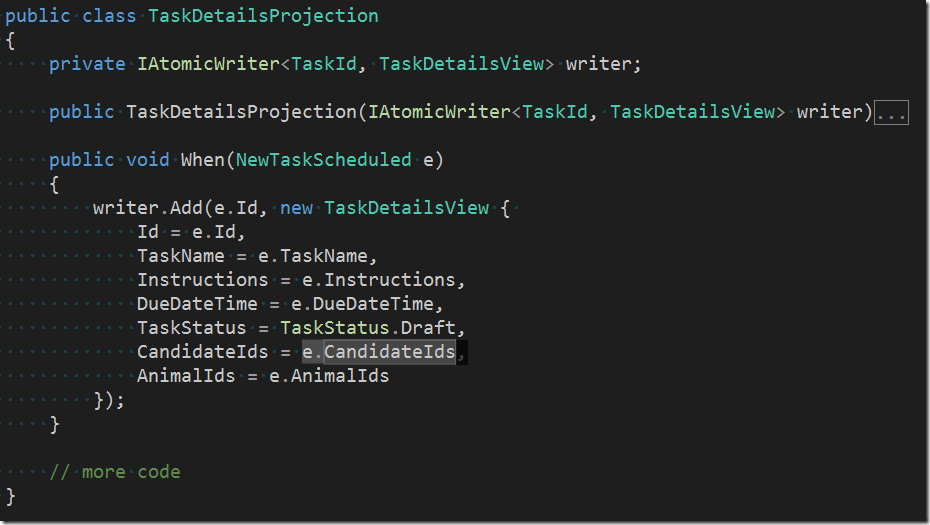

Now we need to define a class that creates the task details projection for us. We want to keep the implementation of this class as simple as possible: it should be a POCO and depend only on a writer object.

The writer that we inject into our projection generator class is responsible for physically writing our views into the data store. In our case the data store will be the file system, but it could just as well be a table in an RDBMS, a document database or a Lucene index. From the perspective of the generator class it doesn’t really matter what type of data store it is.

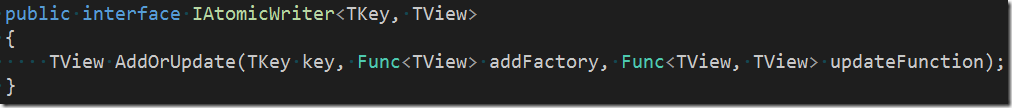

The definition of the IAtomicWriter interface is very simple
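One way such a deliberately small writer contract could look is sketched here. The method names and signatures (AddOrUpdate, TryDelete) are assumptions on my part, and the in-memory implementation only exists so that the sketch can be exercised without a real data store.

```csharp
using System;
using System.Collections.Generic;

// Assumed shape of the writer contract: one upsert-style method plus delete.
public interface IAtomicWriter<TKey, TView>
{
    // Creates the view via addFactory if it is missing, otherwise applies update.
    TView AddOrUpdate(TKey key, Func<TView> addFactory, Func<TView, TView> update);

    // Returns true if a view was removed, false if there was nothing to remove.
    bool TryDelete(TKey key);
}

// Hypothetical in-memory writer; a file-based or database-backed writer
// would implement the same two methods.
public sealed class InMemoryAtomicWriter<TKey, TView> : IAtomicWriter<TKey, TView>
    where TKey : notnull
{
    private readonly Dictionary<TKey, TView> _store = new();
    private readonly object _gate = new();

    public TView AddOrUpdate(TKey key, Func<TView> addFactory, Func<TView, TView> update)
    {
        lock (_gate) // the whole read-modify-write happens as one step
        {
            var view = _store.TryGetValue(key, out var existing)
                ? update(existing)
                : addFactory();
            _store[key] = view;
            return view;
        }
    }

    public bool TryDelete(TKey key)
    {
        lock (_gate) return _store.Remove(key);
    }
}
```

With only two methods to implement, swapping the file system for another store stays cheap.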

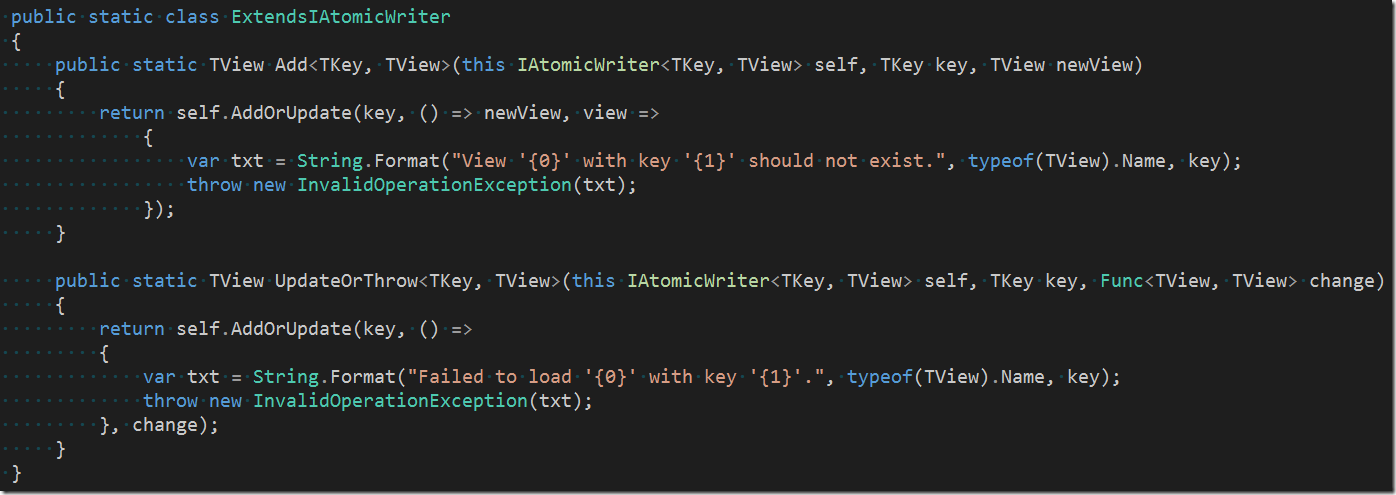

For convenience we can then write some extension methods to this interface

Why not add these methods to the interface directly instead of writing extension methods, you might ask? The reason is that we should always try to keep our interfaces as simple as possible, so that our code remains more composable and less coupled.
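The following sketch shows how convenience methods can be layered on top of a single interface method without widening the interface itself. Only the name Add appears in this post; UpdateOrThrow, the AddOrUpdate signature and the DictionaryWriter helper are assumptions used for illustration.

```csharp
using System;
using System.Collections.Generic;

// Assumed minimal writer contract (hypothetical signature).
public interface IAtomicWriter<TKey, TView>
{
    TView AddOrUpdate(TKey key, Func<TView> addFactory, Func<TView, TView> update);
}

public static class AtomicWriterExtensions
{
    // Add: the view must not exist yet, otherwise we fail loudly.
    public static TView Add<TKey, TView>(
        this IAtomicWriter<TKey, TView> writer, TKey key, TView newView)
        => writer.AddOrUpdate(
            key,
            () => newView,
            _ => throw new InvalidOperationException($"View '{key}' already exists."));

    // UpdateOrThrow (hypothetical name): the view must already exist.
    // Views are assumed to be mutable classes, so the change is applied in place.
    public static TView UpdateOrThrow<TKey, TView>(
        this IAtomicWriter<TKey, TView> writer, TKey key, Action<TView> change)
        where TView : class
        => writer.AddOrUpdate(
            key,
            () => throw new InvalidOperationException($"View '{key}' does not exist."),
            view => { change(view); return view; });
}

// Dictionary-backed writer, included only so the sketch can be run.
public sealed class DictionaryWriter<TKey, TView> : IAtomicWriter<TKey, TView>
    where TKey : notnull
{
    private readonly Dictionary<TKey, TView> _views = new();

    public TView AddOrUpdate(TKey key, Func<TView> addFactory, Func<TView, TView> update)
    {
        var view = _views.TryGetValue(key, out var existing) ? update(existing) : addFactory();
        _views[key] = view;
        return view;
    }
}
```

Every writer implementation gets Add and UpdateOrThrow for free, while the interface that implementers have to satisfy stays at one method.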

Having defined the interface and also added the above extension methods we can now continue to implement our projection generator class.

Each projection is created by events. All data that make up a projection are provided by events. In our sample the first event in the life cycle of a task is the NewTaskScheduled event that we defined in part 1. Let’s add code to handle this event in our projection generator

We use the same convention as we already introduced with the aggregate: we call all our methods When. Each of those methods has exactly one parameter, the event that it handles. This convention makes it easier for us to later on write some tools around our read model. I will discuss this in detail in a later post.

Note that we have used the Add (extension) method of the writer, since at the time the NewTaskScheduled event happens the corresponding view does not yet exist.
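Put together, a projection generator that follows the When convention and handles NewTaskScheduled could be sketched as follows. The event's properties, the view's properties, the writer signature and the DictionaryWriter helper are all assumptions; the real types from part 1 may look different.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for the types defined in the earlier posts.
public sealed record TaskId(Guid Id);
public sealed record NewTaskScheduled(TaskId Id, string Name);

public sealed class TaskDetailsView
{
    public TaskId Id { get; set; }
    public string Name { get; set; } = "";
    public bool Published { get; set; }
}

// Assumed minimal writer contract plus the Add convenience method.
public interface IAtomicWriter<TKey, TView>
{
    TView AddOrUpdate(TKey key, Func<TView> addFactory, Func<TView, TView> update);
}

public static class AtomicWriterExtensions
{
    public static TView Add<TKey, TView>(
        this IAtomicWriter<TKey, TView> writer, TKey key, TView newView)
        => writer.AddOrUpdate(
            key,
            () => newView,
            _ => throw new InvalidOperationException($"View '{key}' already exists."));
}

// POCO projection generator: its only dependency is the writer.
public sealed class TaskDetailsProjection
{
    private readonly IAtomicWriter<TaskId, TaskDetailsView> _writer;

    public TaskDetailsProjection(IAtomicWriter<TaskId, TaskDetailsView> writer)
        => _writer = writer;

    // Convention: every handler is called When and takes exactly one event.
    public void When(NewTaskScheduled e)
        => _writer.Add(e.Id, new TaskDetailsView { Id = e.Id, Name = e.Name });
}

// Dictionary-backed writer, included only so the sketch can be run.
public sealed class DictionaryWriter<TKey, TView> : IAtomicWriter<TKey, TView>
    where TKey : notnull
{
    public Dictionary<TKey, TView> Views { get; } = new();

    public TView AddOrUpdate(TKey key, Func<TView> addFactory, Func<TView, TView> update)
    {
        var view = Views.TryGetValue(key, out var existing) ? update(existing) : addFactory();
        Views[key] = view;
        return view;
    }
}
```

Since TaskId is a record in this sketch, two ids wrapping the same Guid compare as equal, which is what lets it serve as the key of the view.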

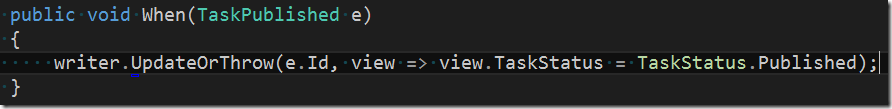

Later on other events of interest might be published by the domain and our projection generator can listen to them. Let’s take as a sample the TaskPublished event. We add the following code to the projection generator

Very simple, isn’t it?
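For an event that arrives later in the life cycle, the handler updates the existing view instead of adding a new one. Here is a minimal sketch, assuming a TaskPublished event that carries only the task id, a Published flag on the view and a hypothetical UpdateOrThrow extension method; none of these details are confirmed by the original code.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for the types defined in the earlier posts.
public sealed record TaskId(Guid Id);
public sealed record TaskPublished(TaskId Id);

public sealed class TaskDetailsView
{
    public TaskId Id { get; set; }
    public string Name { get; set; } = "";
    public bool Published { get; set; }
}

// Assumed minimal writer contract plus an update-only convenience method.
public interface IAtomicWriter<TKey, TView>
{
    TView AddOrUpdate(TKey key, Func<TView> addFactory, Func<TView, TView> update);
}

public static class AtomicWriterExtensions
{
    public static TView UpdateOrThrow<TKey, TView>(
        this IAtomicWriter<TKey, TView> writer, TKey key, Action<TView> change)
        where TView : class
        => writer.AddOrUpdate(
            key,
            () => throw new InvalidOperationException($"View '{key}' does not exist."),
            view => { change(view); return view; });
}

public sealed class TaskDetailsProjection
{
    private readonly IAtomicWriter<TaskId, TaskDetailsView> _writer;

    public TaskDetailsProjection(IAtomicWriter<TaskId, TaskDetailsView> writer)
        => _writer = writer;

    // The view must already exist, because NewTaskScheduled came first.
    public void When(TaskPublished e)
        => _writer.UpdateOrThrow(e.Id, view => view.Published = true);
}

// Dictionary-backed writer, included only so the sketch can be run.
public sealed class DictionaryWriter<TKey, TView> : IAtomicWriter<TKey, TView>
    where TKey : notnull
{
    public Dictionary<TKey, TView> Views { get; } = new();

    public TView AddOrUpdate(TKey key, Func<TView> addFactory, Func<TView, TView> update)
    {
        var view = Views.TryGetValue(key, out var existing) ? update(existing) : addFactory();
        Views[key] = view;
        return view;
    }
}
```

Failing when the view is missing is a design choice in this sketch: it surfaces events that arrive out of order instead of silently creating a half-filled view.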

The resulting file on the file system could look similar to this

(remember that we are using JSON serialization).
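How a view ends up as a JSON file can be sketched in a few lines. This example assumes System.Text.Json (part of .NET Core 3.0 and later) and one file per view named after its key; the serializer, the file naming scheme and the simplified TaskDetailsView are assumptions and may not match what the real writer does.

```csharp
using System;
using System.IO;
using System.Text.Json;

// Simplified, hypothetical view used only for this sketch.
public sealed class TaskDetailsView
{
    public Guid Id { get; set; }
    public string Name { get; set; } = "";
    public bool Published { get; set; }
}

public static class JsonFileViewStore
{
    private static readonly JsonSerializerOptions Options = new() { WriteIndented = true };

    // Serializes the view to <folder>/<key>.json and returns the file path.
    // Note: a plain WriteAllText is not atomic; a real writer would need more care.
    public static string Save<TView>(string folder, string key, TView view)
    {
        Directory.CreateDirectory(folder);
        var path = Path.Combine(folder, key + ".json");
        File.WriteAllText(path, JsonSerializer.Serialize(view, Options));
        return path;
    }

    // Reads the file back into a view; fails if the file contains JSON null.
    public static TView Load<TView>(string path) where TView : class
        => JsonSerializer.Deserialize<TView>(File.ReadAllText(path), Options)
           ?? throw new InvalidDataException($"File '{path}' does not contain a view.");
}
```

Because the file is plain JSON, a view can be inspected with any text editor, which is handy when debugging the read model.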

In the next post I’ll try to wire up all the pieces that we have defined so far, so that we can run a little end-to-end demo. Stay tuned…