- Downtime

- Database failures

- Hackers, and hack bots from all over the Internet

- Slowness

- Scaling issues

- Expensive

- WP Forum: Why Absolute URLs, it’s so stupid

- WP Forum: Please remove absolute URls from WordPress

- 99.99% Uptime

- $0.01/month Hosting Cost (based on 100MB of data)

- Fast response times

- Scales automatically with traffic

- Built to sustain the loss of two concurrent data centers

- Hackers now have to hack Amazon (good luck with that)

- What types of technical debt can you afford to accumulate?

- Where in your codebase can you afford to accumulate technical debt?

- When do you need to make a payment on your technical debt (when to refactor)?

- User experience can suffer from missing functionality.

- Overall utility of your product or service may be compromised.

- Future development will be more costly, if the feature must be added later.

- Possible increased risk of catastrophe (system failure, security compromise, data corruption, etc.) Sometimes code can cause serious damage to people and systems.

- User experience may suffer as users run into bugs that inhibit their ability to use your application.

- Utility of your application can decrease significantly.

- Plan on adding the broken functionality to your bug list, so future development will suffer.

- Certain solutions may not scale with traffic based on their initial design or could be cost prohibitive to effectively scale in the future.

- Logical complexities, for instance in the case of a poorly designed database, can ripple through the entire application, making future development slow to a crawl.

- Decreased utility as your users struggle to fully take advantage of the application.

- Very complex refactorings, since user interfaces inherently tend to be highly coupled, which means more possible drag on future development.

- Maintaining the quality of an application is not possible if you can’t answer the “does it work?” question effectively and efficiently.

- Future development can be more difficult without a way to tell when functionality stops working or when new features break old ones.

- Future development can become much more difficult, if not impossible (rewriting working code is common if the readability is bad enough)

- Risk of being highly dependent on the original developer/team for information about the system (see the bus factor)

- Loss of the ability to understand your entire application, what it does and how it works.

- mp4

- webm

- ogg

- vp8

- h.264

- theora

- Strange type coercion and overloading of +

- Strange results for equality testing: == or ===, != and !==

- Prototypal inheritance vs. class based object orientation

- Noisy syntax, lots of curly braces, function keywords, and semicolons

- Global variables are as easy as missing a var statement

- Lack of any sort of design aesthetic

- Lots of bad javascript programmers (think graphic designers)

- Lots of bad javascript code (think code that a graphic designer would write)

- Hundreds of implementations for every browser, version and other javascript dependent platform (and yes, some of them are buggy)

- Awesomely dynamic (basically you can do anything you want, type safety and common sense be damned)

- Top-notch functional programming

- Largest community of developers

- Largest install base on the planet

- No corporate ownership of the standard

- Sophisticated javascript runtimes (most modern browsers, node.js etc)

- Added debugging complexity

- Added compile complexity

- Smaller development community

Heartbleed Hotel: The biggest Internet f*ckup of all time

The heartbleed bug is the single biggest f*ckup in the history of the Internet.

For anyone who doubts the veracity of this claim, let me state the plain and simple facts:

Since December of 2011 any individual with an Internet connection could read the memory of maybe half of the “secure” Web servers on the Internet.

For the layman, reading the memory of a Web server often means being able to read usernames, passwords, credit card numbers and basically anything else that the server is doing.

Shocking.

For over two years we’ve all had our pants down and no one even noticed.

Open Source Failed

For most developers, myself included, open source has been a mythical paradigm shift in software development that should be embraced without question.

What of bugs? There’s a famous adage about bugs in open source:

Given enough eyeballs, all bugs are shallow

Applied to this case, the buffer over-read in the heartbeat protocol should have been easily found by someone. But it wasn’t.
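
To make the bug concrete, here's a rough simulation in Node.js. It is not OpenSSL's code (that's written in C); the `memory` buffer, its contents and both function names are made up for illustration. The idea is simply that the server echoes back as many bytes as the client claims to have sent, without checking that claim against what actually arrived.

```javascript
// Simulated server memory: a 4-byte heartbeat payload followed by unrelated secrets.
// The contents are invented for this sketch.
var memory = Buffer.from('PINGuser=alice;password=hunter2');

// Vulnerable: trusts the length the client claims to have sent.
function heartbeatVulnerable(payloadOffset, claimedLength) {
  return memory.subarray(payloadOffset, payloadOffset + claimedLength).toString();
}

// Checked: ignores the request when the claimed length exceeds the real payload size.
function heartbeatChecked(payloadOffset, actualLength, claimedLength) {
  if (claimedLength > actualLength) return null;
  return memory.subarray(payloadOffset, payloadOffset + claimedLength).toString();
}

console.log(heartbeatVulnerable(0, 4));   // the honest request
console.log(heartbeatVulnerable(0, 31));  // the dishonest request leaks the neighbouring bytes
console.log(heartbeatChecked(0, 4, 31));  // the bounds check rejects the dishonest request
```

One missing comparison is the whole difference between the two functions, which is part of why it's so unsettling that nobody spotted it.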

After the heartbleed bug, can this statement of open source fact still be considered valid? What does it mean to have eyes on code?

If I wanted to look at the code for OpenSSL, I could have. Guess what? I never did, and I would bet that most of the people reading this article didn’t either.

The Ultimate Humiliation for Public Key Infrastructure

It’s long been known that our current public key infrastructure is horribly broken, as proven by numerous compromises of the supposed “sources of trust”, the certificate authorities.

It has been broken and run roughshod over by the likes of the NSA, hackers and other government agencies across the globe.

This latest episode is really just icing on the cake. Not only are the private keys of “secured” servers up for grabs, but so is their most sensitive and personal data.

I think it’s time we as an industry take a good hard look in the mirror, and reevaluate… everything.

Frontend & Backend: Gotta Keep’em Separated

I used to start my web applications by throwing all of my code into one big project. So I’d have html, css, javascript and all the backend code together in one monolithic directory.

Now, I take a different, smarter approach. I separate the frontend from the backend. Separate code, separate projects. Why?

Different Skillz

One downside to having your entire project tucked neatly into one of today’s monster frameworks like Rails, Django, or MVC, is that it can be very difficult for a frontend developer to work on the project.

While it might be simple for a seasoned Ruby dev to set up rvm, gem install all of the ruby dependencies, and deal with native extensions and cross platform issues, these things are probably not what your frontend developer is best suited for.

In most cases, your typical frontend and backend developers are very different. A frontend developer needs to focus more on user experience and design, whereas a backend developer needs to focus more on architecture and performance.

Or, to put it another way: your frontend developer is probably an uber-hipster who would keel over and die without his mac and latte, while your backend developer is probably more like one of Richard Stallman’s original neckbeard disciples.

A better architecture

What if the entire backend of your project is simply an API? That sure makes things easier and simpler on your backend developers. Now, they don’t have to worry at all about html, css, or javascript.

It’s also much simpler on the frontend developers, since they can start their work without having to worry about “bugs in the build system” or other server side problems.

It also promotes making the frontend a real first class application and ensuring that it’s truly robust. Hopefully, the frontend developer is now encouraged to code for the inevitable scenario when the backend goes down.

It’s a much better user experience to say, “Hey, we’re having some issues with the server right now, try back later” or, even better, “The search service appears to be having issues at the moment, but you can still view your profile and current projects.”

In general, I think the separation approach also promotes the use of realtime single page applications on the frontend. In my opinion, this offers the best user experience and design architecture for modern web applications.
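
Here's a minimal sketch of that idea on the frontend. The `loadSection` helper, the section names and the fake fetchers are all hypothetical; the point is only that each backend call fails on its own, and the page falls back to a message instead of breaking as a whole.

```javascript
// Calls one backend service and falls back to a friendly message if it fails.
// `fetcher` is any function that takes a Node-style callback(err, data).
function loadSection(name, fetcher, render) {
  fetcher(function(err, data) {
    if (err) {
      return render(name, 'The ' + name + ' service appears to be having issues at the moment.');
    }
    render(name, data);
  });
}

// Fake services for the example: search is down, profile is up.
function fetchSearch(next) { next(new Error('503 Service Unavailable')); }
function fetchProfile(next) { next(null, 'Profile: 3 current projects'); }

// Stand-in for real DOM rendering: just record what each section would show.
var page = {};
function render(name, content) { page[name] = content; }

loadSection('search', fetchSearch, render);
loadSection('profile', fetchProfile, render);
console.log(page);
```

Because the frontend is its own application, this kind of per-section fallback is easy to add without touching the backend at all.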

Taming Callback Hell in Node.js

One of the first things that you’ll hear about Node.js is that it’s async and it uses callbacks everywhere. In some ways this makes Node.js more complex than your typical runtime, and in some ways it makes it simpler.

When executing long sequences of async steps in series, it can be easy to generate code that looks like this:

    // callback hell
    exports.processJob = function(options, next) {
      db.getUser(options.userId, function(err, user) {
        if (err) return next(err);
        db.updateAccount(user.accountId, options.total, function(err) {
          if (err) return next(err);
          http.post(options.url, function(err) {
            if (err) return next(err);
            next();
          });
        });
      });
    };

This is known as callback hell. And while there is nothing wrong with the code itself from a functional perspective, the readability of the code has gone to hell.

One problem here is that as you add more steps, you will see your code fly off the right of the screen (as each callback adds another nesting/indentation level). This can be unnerving for us developers since we tend to read code better vertically than horizontally.

Luckily Node.js code is simply javascript, which means that you actually have a lot of flexibility in how you structure your code. You can make the snippet above much more readable by shuffling some functions around.
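
For instance, one way to shuffle things around without any library is to pull each callback out into a named function. This is only a sketch: `db` and `http` below are stand-in stubs made up so the snippet runs on its own, mirroring the calls in the snippet above.

```javascript
// Stand-in stubs so the example is self-contained (not real modules).
var calls = [];
var db = {
  getUser: function(id, next) { calls.push('getUser'); next(null, { accountId: 42 }); },
  updateAccount: function(accountId, total, next) { calls.push('updateAccount'); next(null); }
};
var http = {
  post: function(url, next) { calls.push('post'); next(null); }
};

// Same three steps as before, but each callback is a named function
// declared at a single nesting level.
function processJob(options, next) {
  db.getUser(options.userId, onUser);

  function onUser(err, user) {
    if (err) return next(err);
    db.updateAccount(user.accountId, options.total, onAccountUpdated);
  }

  function onAccountUpdated(err) {
    if (err) return next(err);
    http.post(options.url, onPosted);
  }

  function onPosted(err) {
    if (err) return next(err);
    next();
  }
}

var finished = false;
processJob({ userId: 1, total: 10, url: 'http://example.com/hook' }, function(err) {
  finished = !err;
});
console.log(calls.join(' -> '), finished);
```

The code now reads top to bottom, at the cost of having to invent a name for every step.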

Personally, I’m partial to a little Node.js library called async, so I’ll show you how I use one of its constructs, async.series, to make the above snippet more readable.

    exports.processJob = function(options, next) {
      var context = {}; // shared state between the steps
      async.series({
        getUser: function(next) {
          db.getUser(options.userId, function(error, user) {
            if (error) return next(error);
            context.user = user;
            next();
          });
        },
        updateAccount: function(next) {
          var accountId = context.user.accountId;
          db.updateAccount(accountId, options.total, next);
        },
        postToServer: function(next) {
          http.post(options.url, next);
        }
      }, next);
    };

What did we just do?

The async.series function can take either an array or an object. The nice thing about handing it an object is that it gives you the opportunity to do some lightweight documentation of the steps by giving the object’s keys descriptive names, like getUser, updateAccount, and postToServer.
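
If you're curious what a helper like this does under the hood, here's a stripped-down stand-in. To be clear, this is not the async library's code, and `miniSeries` is a made-up name; it only handles the object form, runs each step once the previous one calls back, and stops at the first error.

```javascript
// Minimal stand-in for a series helper (object form only).
function miniSeries(steps, done) {
  var names = Object.keys(steps);
  var results = {};

  function run(index) {
    if (index === names.length) return done(null, results);
    var name = names[index];
    steps[name](function(err, value) {
      if (err) return done(err, results); // stop at the first error
      results[name] = value;
      run(index + 1);                     // only now start the next step
    });
  }

  run(0);
}

var order = [];
var outcome;
miniSeries({
  first: function(next) { order.push('first'); next(null, 1); },
  second: function(next) { order.push('second'); next(new Error('boom')); },
  third: function(next) { order.push('third'); next(null, 3); }
}, function(err, results) {
  outcome = { error: err && err.message, results: results };
});
console.log(order, outcome);
```

The third step never runs, because the second one reported an error first.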

Also, notice how we solved another problem, which is sharing state between the various steps by using a variable called “context” that we defined in an outer scope.

One cool thing about Node.js that async shows off quite nicely is that we can easily change steps to run in parallel using the same coding paradigm. Just change from async.series to async.parallel. (That only works for steps that don’t depend on each other; in the example above, updateAccount needs the user loaded by getUser, so those two have to stay in series.)

The asynchronous architecture of Node.js can help make applications more scalable and performant, but sometimes you have to do a little extra to keep things manageable from a coding perspective.
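
To illustrate what running in parallel means here, this is a small stand-in written for this post, not the async library's implementation. The `miniParallel` name and the two sample steps are assumptions. Every step is started right away, and the final callback fires once all of them have called back.

```javascript
// Minimal stand-in for a parallel helper (object form only).
function miniParallel(steps, done) {
  var names = Object.keys(steps);
  var results = {};
  var pending = names.length;
  var failed = false;

  if (pending === 0) return done(null, results);

  names.forEach(function(name) {
    steps[name](function(err, value) {
      if (failed) return;                       // an earlier step already reported an error
      if (err) { failed = true; return done(err); }
      results[name] = value;
      pending -= 1;
      if (pending === 0) done(null, results);   // last one in reports the results
    });
  });
}

// Two independent steps; we hold on to their callbacks so we can finish them out of order.
var waiting = {};
var started = [];
var finalResults = null;

miniParallel({
  loadUser: function(next) { started.push('loadUser'); waiting.loadUser = next; },
  loadSettings: function(next) { started.push('loadSettings'); waiting.loadSettings = next; }
}, function(err, results) {
  finalResults = results;
});

// Both steps were started before either one finished.
waiting.loadSettings(null, { theme: 'dark' });
waiting.loadUser(null, { name: 'alice' });
console.log(started, finalResults);
```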


Why WordPress Sucks, and what you can do about it

While great as a CMS, WordPress really sucks as your public-facing production environment. After one week in production with our redesigned WordPress site, we had already racked up 8 hours of downtime due to database failures.

Also, we’re paying a hefty $43 per month to host the Apache/PHP/MySQL monstrosity on Amazon EC2.

When I play the word association game with WordPress these are the things that come to mind:

Also, WordPress has made some very bizarre design decisions around absolute URLs that I would describe as “dumb”. Here are some actual forum topics on the subject.

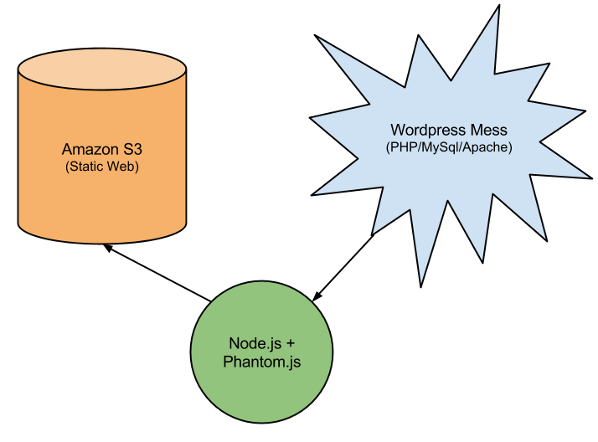

How I fixed WordPress

The only thing that can fix bad technology is good technology, so I came up with a solution that involved using Amazon S3 and Node.js/Phantom.js to build a rock solid, high performance frontend for super cheap.

By using Phantom.js and Node.js, I was able to crawl the entire site, pull every single resource from WordPress, and then pipe it over to S3 for static web hosting.
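
One small piece of a pipeline like this is deciding where each crawled resource lands in the bucket. Here's a rough sketch of that mapping. The `toS3Key` function and the `index.html` convention for directory-style URLs are assumptions for illustration, not the actual crawler code.

```javascript
// Maps a crawled page or asset URL to an S3 object key.
// Directory-style URLs get an index.html so static hosting can serve them,
// and query strings (like ?ver=3.5) are dropped.
function toS3Key(resourceUrl) {
  var pathname = new URL(resourceUrl).pathname;
  if (pathname.charAt(pathname.length - 1) === '/') pathname += 'index.html';
  return pathname.replace(/^\/+/, ''); // object keys here carry no leading slash
}

console.log(toS3Key('http://example.com/'));
console.log(toS3Key('http://example.com/about/'));
console.log(toS3Key('http://example.com/wp-content/themes/site/style.css?ver=3.5'));
```

Keeping the keys identical to the original paths is what lets the static copy serve the same URLs the WordPress site did.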

If you’re looking for a tutorial on static web hosting, read this excellent post by Chad Thompson.

Life on Amazon S3 is Nice

So now, my production environment on Amazon S3 has these wonderful qualities:

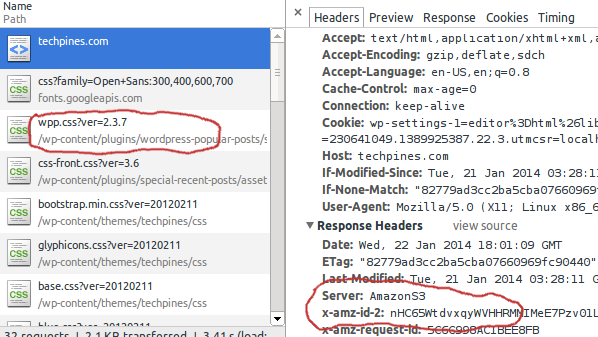

If you go to the new site, and open up a javascript console, you should see this:

Notice, it’s all the familiar URLs from WordPress, /wp-content and /wp-content/themes, but all coming from Amazon’s rock solid S3 service.

As an added bonus, I have the option of doing additional processing of resources as they are piped, so I could compact and minify JS and CSS resources, or create 2X size images for retina displays, or do any other kind of processing without ever touching the backend of the WordPress site. Pretty cool.

Side Note: If you’ve got a WordPress site, and you’re interested in hearing more about this, please feel free to contact me.

When is it a Good Idea to write Bad Code?

Imagine an asteroid is barreling towards earth and the head of NASA tells you and your development team that you have 12 months to code the guidance system for the missile that will knock the asteroid off its earthbound trajectory.

Your first thought may be, “Sh$@*t!” But let’s say you agree. What does the quality of your code look like after 12 months? Is it perfect?

Now imagine an asteroid is barreling towards earth and you have only 1 month to code the guidance system. Now, imagine a worse scenario, you only have 24 hours to code the guidance system or the earth is a goner.

What is Technical Debt?

You should read Martin Fowler’s excellent introduction to technical debt. Technical debt could be defined as the difference between the application that you would like to build and the application that you actually build.

All software projects with limited resources will accumulate technical debt.

Technical debt is often viewed as a negative or pejorative term. However, in reality, technical debt can be leveraged to actually launch successful applications and features. The key to leveraging technical debt is to understand:

Application development typically involves a variety of groups and individuals, for example the development team, business stakeholders, and your end users. To understand technical debt, it’s important to realize the following:

Technical debts are always paid by someone.

Types of Technical Debt

To understand technical debt, it is important to understand the different types of technical debt that can accumulate in an application.

Missing Functionality

Missing functionality can make the user experience of your application more awkward to use, but on the up side it doesn’t pollute the codebase. Chopping features can be a useful technique for reaching deadlines and launching your application.

Tradeoffs

Broken Functionality

Broken functionality refers to code that “straight up” does not work under some or all circumstances.

Tradeoffs

Bad Architecture Design

Systems benefit from being loosely coupled, and highly independent. Conversely, highly coupled systems with lots of dependencies or interdependencies can be highly erratic and difficult to maintain and upgrade. They can also be very difficult to reason about.

Bad architecture decisions can be some of the most expensive to fix, as architecture is the core underpinning of your application.

Tradeoffs

Bad User Experience Design

If your users don’t know how to use your application, or make mistakes while using it because of an overly complex user interface, then it will surely be your user base that is paying down this form of technical debt.

Bad user experience design can be very expensive to fix since the whole of the application development process depends on it. This leaves the very real possibility that you’ve spent your limited development resources creating subsystems and code that don’t properly solve the problems of your end users.

Tradeoffs

Lack of Testing

The main purpose of testing, both automated and manual, is to answer the simple question: does your code work? If you can’t answer this question, then you’d be better off heading to the casino rather than launching your application.

In a lot of ways, “lack of testing” can be viewed through the lens of understanding what your application is capable of.

Tradeoffs

Bad Code Readability

This is usually referred to as code smell, and again I like Martin Fowler’s explanation. Bad code readability is easy for a developer to spot, because they simply look at the code and decide if it makes sense to them or not. Therefore it can also be subjective.

Being able to read and understand code is critical to being able to debug and modify it later. This should not be confused with broken functionality, which is a different concept.

In developer circles, there is a somewhat innate bias towards overemphasizing this form of technical debt (the reason being that it is the developer who will ultimately pay for it). Just remember that you can have “Bad Code” that doesn’t smell (silent but deadly).

Tradeoffs

All applications will have some level of technical debt in each of the above categories, but again, it is managing this debt that gives a development team the ability to successfully launch their application.

HTML5 Video: Transcoding with Node.js and AWS

I recently worked on a video project, and I had a chance to use the relatively new AWS Elastic Transcoder with Node.js. Along the way, I learned a lot about video on the web, and I thought I’d share some of my experiences.

Current State of Video on the Web

The current state of video on the web is a bit confusing, so here goes my best explanation of it.

Before HTML5, the most prominent technology used for video on the Web was Flash. However, because Flash is a proprietary technology, the Web community decided that an open standard was needed. Thus the <video> tag was born.

The good news is that most browsers support the <video> tag. Unfortunately, that is where a lot of the cross compatibility stops.

Every video file has a specific video container format that describes how the images, sound, subtitles etc. are laid out in the video file. There are currently three popular video container formats on the Web:

Because video files are so enormous they are almost always compressed. The compression algorithm used is called a video codec. These are the most popular video codecs being used on the Web:

I found this nifty image from here, that helps show the video file layout:

The problem is that there is no standard video container/codec for the web due to disputes over licensing issues, and therefore you have to transcode videos into multiple formats. Fortunately, it seems that you can get pretty widespread browser support with just two codec/container combinations, mp4/h.264 and webm/vp8.

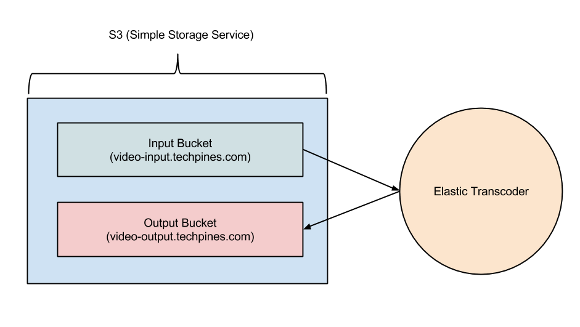

Transcoding with AWS and Node.js

In May of this year, Amazon released an official SDK for Node.js. It’s a superbly written library that has code for almost all of the AWS services.

Also earlier this year, Amazon released Elastic Transcoder. Elastic Transcoder is a pretty slick service that allows you to transcode videos from just about any format into one of the standard web video formats above.

The Elastic Transcoder reads source videos from Amazon S3 and writes the transcoded output back to S3. The architecture looks like this:

Node.js is a great platform to build a standalone video processing server that manages resources between S3 and Elastic Transcoder. Node’s streaming capabilities and non-blocking nature make it a perfect fit for chunking video files to S3 and managing video transcoding.

Here’s a snippet of Node.js code that shows how easy it can be to create a transcoding job with Elastic Transcoder:

    elastictranscoder.createJob({
      PipelineId: pipelineId, // specifies output/input buckets in S3
      OutputKeyPrefix: '/videos',
      Input: {
        Key: 'my-new-video',
        FrameRate: 'auto',
        Resolution: 'auto',
        AspectRatio: 'auto',
        Interlaced: 'auto',
        Container: 'auto'
      },
      Output: {
        Key: 'my-transcoded-video.mp4',
        ThumbnailPattern: 'thumbs-{count}',
        PresetId: presetId, // specifies the output video format
        Rotate: 'auto'
      }
    }, function(error, data) {
      // handle callback
    });
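
Since you usually want both mp4/h.264 and webm/vp8, it can help to build the job parameters from a small table of formats. This sketch only builds the params object and never calls AWS. The `buildJobParams` helper, the pipeline ID and the preset ID strings are placeholders made up for the example, and it relies on the `Outputs` array that `createJob` accepts when a job has more than one output.

```javascript
// Builds createJob params with one output per target format.
// Preset IDs below are placeholders; look up the real ones for your account.
function buildJobParams(pipelineId, inputKey, formats) {
  return {
    PipelineId: pipelineId,
    Input: {
      Key: inputKey,
      FrameRate: 'auto',
      Resolution: 'auto',
      AspectRatio: 'auto',
      Interlaced: 'auto',
      Container: 'auto'
    },
    Outputs: formats.map(function(format) {
      return {
        Key: inputKey + '.' + format.extension,
        PresetId: format.presetId,
        Rotate: 'auto'
      };
    })
  };
}

var params = buildJobParams('my-pipeline-id', 'cats', [
  { extension: 'mp4', presetId: 'MP4_PRESET_ID' },
  { extension: 'webm', presetId: 'WEBM_PRESET_ID' }
]);
console.log(JSON.stringify(params, null, 2));
```

The resulting object can be handed to `elastictranscoder.createJob(params, callback)` in place of the inline literal.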

Video.js: The Open Source HTML5 Video Player

Unfortunately, the HTML5 video tag only gets you about half the way there. There are a lot of missing features between different browsers. Luckily the guys at Brightcove wrote video.js, an awesome library that helps provide cross browser HTML5 video support with fallback support to Flash.

Here is a snippet that shows how things are tied together on the frontend with video.js:

    <link href="//vjs.zencdn.net/4.2/video-js.css" rel="stylesheet">
    <script src="//vjs.zencdn.net/4.2/video.js"></script>

    <video id="example_video" class="video-js vjs-default-skin"
           controls preload="auto" width="640" height="264"
           data-setup='{"example_option":true}'>
      <source src="//video.techpines.com/cats.mp4" type='video/mp4' />
      <source src="//video.techpines.com/cats.webm" type='video/webm' />
    </video>

I hope that helps anyone looking to understand video on the Web.

Coffeescript vs. Javascript: Dog eat Dog

I’m happy to say that I’m now an official los techies “techie”! Thanks to Chris Missal and all the other amazingly smart people who let me join the Los Techies crew. Without further ado, this article is going to focus on the pros and cons of javascript vs coffeescript.

The Case against Javascript

Javascript has a bad reputation of being a flaky scripting language, because let’s face it, that’s exactly how it started out. There are a number of let’s say, quirks, in the language that make most developers cringe:

Now those last three are more a reflection of the javascript ecosystem than the language design, but they are worth a mention, because they contribute to javascript’s overall poor reputation.
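
To see the coercion and equality quirks in action, here's a tiny snippet you can paste into a console. Nothing here depends on any library; the variable names are just labels for the example.

```javascript
// The + operator is overloaded: it adds numbers but concatenates strings.
var concatenated = 1 + '1';            // '11'
var subtracted = '3' - 1;              // 2, because - only works on numbers

// Loose equality coerces its operands; strict equality does not.
var looseZero = (0 == '');             // true
var strictZero = (0 === '');           // false
var looseNull = (null == undefined);   // true
var strictNull = (null === undefined); // false

// NaN is not equal to anything, including itself.
var nanEqual = (NaN === NaN);          // false

console.log(concatenated, subtracted, looseZero, strictZero, looseNull, strictNull, nanEqual);
```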

There’s actually some great stuff about Javascript

While javascript may look gnarly on the outside, there are actually some pretty nice features from a semantic standpoint:
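
As a small taste of the functional side, here's a sketch using first-class functions and closures. The `compose` and `makeCounter` helpers and the sample data are made up for the example.

```javascript
// Functions are values: compose builds a new function from two others.
function compose(f, g) {
  return function(x) { return f(g(x)); };
}

// Closures: each counter keeps its own private state.
function makeCounter() {
  var count = 0;
  return function() { count += 1; return count; };
}

var double = function(n) { return n * 2; };
var increment = function(n) { return n + 1; };
var doubleThenIncrement = compose(increment, double);

var total = [1, 2, 3, 4]
  .filter(function(n) { return n % 2 === 0; })       // keep 2 and 4
  .map(doubleThenIncrement)                          // 5 and 9
  .reduce(function(sum, n) { return sum + n; }, 0);  // 14

var counter = makeCounter();
counter();
console.log(total, counter());
```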

Coffeescript: It’s Better than Javascript right?

A few years ago, coffeescript jumped onto the scene as an alternative to straight-up javascript. (If you want to take a closer peek at the syntax, then I advise you to check out their site, coffeescript.org.)

CoffeeScript is a little language that compiles into JavaScript. Underneath that awkward Java-esque patina, JavaScript has always had a gorgeous heart. CoffeeScript is an attempt to expose the good parts of JavaScript in a simple way.

What a novel idea!? A precompiled language that fixes all of the nastiness and awkwardness of the javascript language.

Well, it turns out that it’s not that simple. Here’s an excellent rant/explanation of why coffeescript is not so great.

The Subjective Argument against Coffeescript

The subjective argument against coffeescript can be summed up pretty easily:

Do you like C-style syntax or Ruby/Python-style syntax?

Coffeescript was designed in the vein of Python and Ruby, so if you hate those languages then you will probably hate coffeescript. As far as programming languages go, I believe that a developer will usually be more productive in the language that they like programming in. This may seem like a no-brainer, but there are quite a few articles out there trying to argue the objective truth of and vs &&.

The Hard Objective Arguments against Coffeescript

Here are what I consider the most pertinent arguments against coffeescript.

How to Decide which language for your Project

I’ve worked on large projects in both javascript and coffeescript, and guess what? You can build an application in either one, and your decision of javascript or coffeescript is not going to make or break you.

If you are working on a project by yourself, then I would pick whichever language you prefer working in. It’s totally subjective, but you are going to be more productive coding in a language that you like coding in vs. one that you hate coding in.

Now, for the tricky part. What if you’re working on a team?

Coffeescript ate my Homework

This is where coffeescript can be a huge problem. If you are working with a tight group of skilled engineers, and you decide to work in coffeescript, and everyone is on board, then my opinion is that you might very well end up being more productive, especially with programmers that have a Ruby or Python background.

However, for projects that have a revolving team of contractors, or junior developers who hardly understand the semantics of javascript to begin with, I would say watch out!

This may seem paradoxical, but I think part of the problem comes back to the debugging cycle. Debugging is a critical part of learning a new language, and for someone with a good javascript background, the coffeescript debugging problem shouldn’t be that much of an issue. However, for a noob, it can be a killer. They can’t tell whether their mistakes come from coffeescript or javascript, which leads to major time sinks on very mundane syntax errors.

In short… it depends 🙂
