How we got rid of the database – part 2

A quick introductory sample – continued

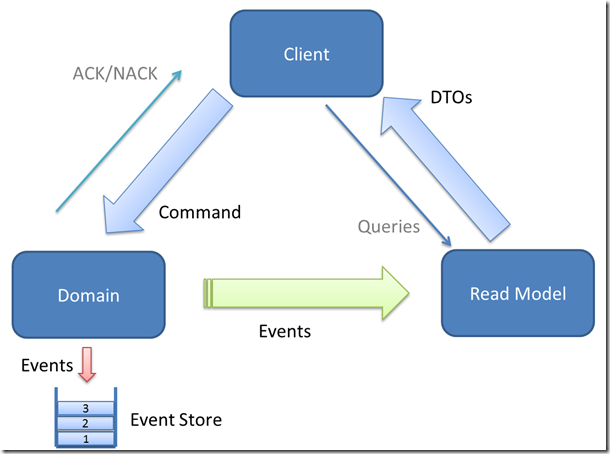

In part one of this series I started to explain what happens when a user (in this particular case a principal investigator) wants to schedule a new task. A command is sent from the client (the user interface of the application) to the domain, where it is handled by an aggregate. The aggregate first checks whether the command violates any invariants. If it does not, the aggregate converts the command into an event and forwards this event to an external observer. The observer is injected into the aggregate by the infrastructure when the aggregate is created. The observer accepts the event and stores it in the so-called event store.
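To make this flow concrete, here is a minimal sketch in C#. It is an illustration under assumptions, not the code of our application: the `ScheduleTask` command, the `TaskScheduled` event, the two invariants and the use of a plain `Action<object>` as the observer are all hypothetical.

```csharp
using System;

// Hypothetical command and event; names and properties are illustrative only.
public sealed record ScheduleTask(Guid TaskId, string Name, DateTime DueDate);
public sealed record TaskScheduled(Guid TaskId, string Name, DateTime DueDate);

public sealed class TaskAggregate
{
    // The observer is handed in from the outside (by the infrastructure).
    private readonly Action<object> _observer;
    private bool _scheduled;

    public TaskAggregate(Action<object> observer) => _observer = observer;

    public void Handle(ScheduleTask command)
    {
        // 1. Check the invariants (assumed here: schedule only once, name required).
        if (_scheduled)
            throw new InvalidOperationException("Task is already scheduled.");
        if (string.IsNullOrWhiteSpace(command.Name))
            throw new ArgumentException("A task needs a name.", nameof(command));

        // 2. Convert the command into an event.
        var e = new TaskScheduled(command.TaskId, command.Name, command.DueDate);

        // 3. Update the in-memory state and forward the event to the observer,
        //    which is responsible for storing it in the event store.
        Apply(e);
        _observer(e);
    }

    public void Apply(TaskScheduled e) => _scheduled = true;
}
```

In a test the observer can simply be the `Add` method of a list; in production it would be whatever writes to the event store.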

It is important to realize that once the event is stored we can commit the transaction, and we’re done. “What?”, I can hear you say now, “don’t we have to save the aggregate too?”

No, there is no need to save (the current state of) the aggregate; saving the event is enough. Since we store every event that an aggregate raises over time, we have the full history of exactly what happened to it. Whenever we want to know the current state of an aggregate, we can re-create the aggregate from scratch and re-apply all the events that we have stored for this particular instance.
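Re-creating an aggregate from its history can be sketched as follows. The three event types, the `TaskState` enum and the `FromHistory` factory are assumptions made for the example; the real task aggregate certainly knows more events than these.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical events of a task, in the order in which they would typically occur.
public sealed record TaskScheduled(Guid TaskId, string Name);
public sealed record TaskStarted(Guid TaskId);
public sealed record TaskCompleted(Guid TaskId);

public enum TaskState { None, Scheduled, Started, Completed }

public sealed class TaskAggregate
{
    public TaskState State { get; private set; } = TaskState.None;
    public string Name { get; private set; } = "";

    // Start with an empty aggregate and re-apply every stored event in order.
    public static TaskAggregate FromHistory(IEnumerable<object> history)
    {
        var task = new TaskAggregate();
        foreach (var e in history)
            task.Apply(e);
        return task;
    }

    // Applying an event only mutates state; it never validates or rejects anything,
    // because the event describes something that has already happened.
    private void Apply(object e)
    {
        switch (e)
        {
            case TaskScheduled s:
                State = TaskState.Scheduled;
                Name = s.Name;
                break;
            case TaskStarted _:
                State = TaskState.Started;
                break;
            case TaskCompleted _:
                State = TaskState.Completed;
                break;
            default:
                throw new ArgumentException("Unknown event type " + e.GetType().Name);
        }
    }
}
```

Feeding the sketch a history of scheduled, started and completed events yields an aggregate whose `State` is `Completed`, without the state itself ever having been saved.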

We can extend the diagram that I first presented in my last post as follows:

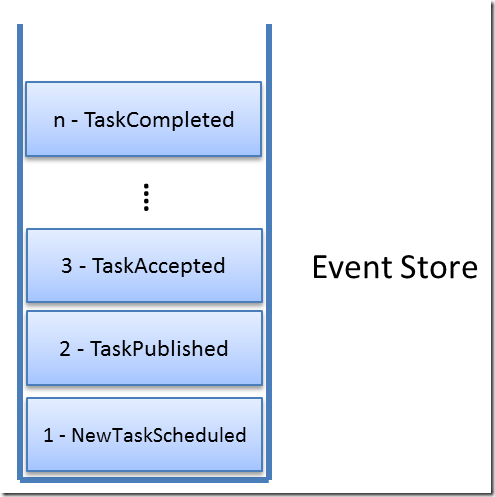

If we now look at just the events of one single task instance, we have an ordered stream of n events, from the first event the task raised to the most recent one.

If we create a new task aggregate and apply all n events that we find in the event store, then the result is a task aggregate that has been completed.

It is important to know that new events are only ever appended to the stream of existing events. We never delete or modify an existing event! This is comparable to the transaction journal of an accountant: the accountant only ever adds new journal entries and never modifies or deletes a previous one.

But how do we store these events? There are various possibilities. We could serialize the event and store it in a column of type BLOB (binary large object) or CLOB (character large object) of a table in a relational database. This table would also need a column in which we store the ID of the aggregate.

We could just as well store the (serialized) event in a document database or in a key-value store (e.g. a hash table).
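Whichever backing store we pick, the contract stays the same: append a serialized event under the ID of its aggregate, and read all events of one aggregate back in the order in which they were appended. A minimal in-memory sketch of that contract, standing in for a key-value store, might look like this (`InMemoryEventStore` is a hypothetical name, and a real store would of course persist its data):

```csharp
using System;
using System.Collections.Generic;

public sealed class InMemoryEventStore
{
    // Key: aggregate ID. Value: the serialized events of that aggregate, oldest first.
    private readonly Dictionary<Guid, List<byte[]>> _streams =
        new Dictionary<Guid, List<byte[]>>();

    // There is deliberately no Update or Delete: the store is append-only.
    public void Append(Guid aggregateId, byte[] serializedEvent)
    {
        if (!_streams.TryGetValue(aggregateId, out var stream))
        {
            stream = new List<byte[]>();
            _streams[aggregateId] = stream;
        }
        stream.Add(serializedEvent);
    }

    // Returns a read-only view of all events of one aggregate, in append order.
    public IReadOnlyList<byte[]> Load(Guid aggregateId)
    {
        if (_streams.TryGetValue(aggregateId, out var stream))
            return stream.AsReadOnly();
        return new List<byte[]>().AsReadOnly();
    }
}
```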

But wait a second… if we serialize the event, why can we not just store it directly in the file system? Why do we need such a thing as a database, which only adds complexity to the system? (It is always funny to point out that a database ultimately stores its data in the file system too.)

Let’s then define the boundary conditions:

- we serialize the events using Google’s Protocol Buffers format

- we create one file per aggregate instance; this file contains all events of the particular instance

- any new incoming event is always appended at the end of the corresponding file

Advantages

- Google Protocol Buffers

  - produces very compact output

  - is one of the fastest ways to serialize an object (graph)

  - is relatively tolerant to changes in the event signature (we can rename properties or add new properties without producing version conflicts)

- since we usually operate on only a single aggregate per transaction, we need only one read operation to get all the events required to reconstruct the corresponding aggregate

- append operations to a file are fast and simple

Disadvantages

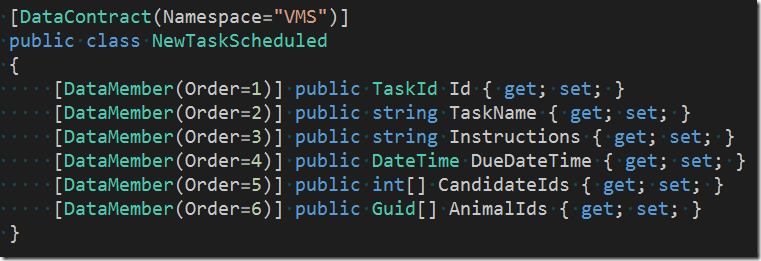

- we have to decorate our events with attributes to make them serializable with Google Protocol Buffers

If we use the protobuf-net library, which is available as a NuGet package, to serialize our events, then we can use the standard DataContractAttribute and DataMemberAttribute to decorate our events.

Note that the order of the properties and their data types are important, but not their names.
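A decorated event might look like the following sketch. The `TaskScheduled` event and its three properties are hypothetical; only the two attributes come from the framework (namespace `System.Runtime.Serialization`).

```csharp
using System;
using System.Runtime.Serialization;

[DataContract]
public sealed class TaskScheduled
{
    // The Order value identifies the field in the serialized data.
    // Renaming a property is therefore harmless as long as its Order
    // and its data type stay the same.
    [DataMember(Order = 1)]
    public Guid TaskId { get; set; }

    [DataMember(Order = 2)]
    public string Name { get; set; } = "";

    [DataMember(Order = 3)]
    public DateTime DueDate { get; set; }

    // A property added later would simply get the next free number,
    // e.g. Order = 4, without affecting events that are already stored.
}
```

With the protobuf-net package referenced, an instance of such a class can be passed to `ProtoBuf.Serializer.Serialize(stream, instance)` and read back with `ProtoBuf.Serializer.Deserialize<TaskScheduled>(stream)`.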

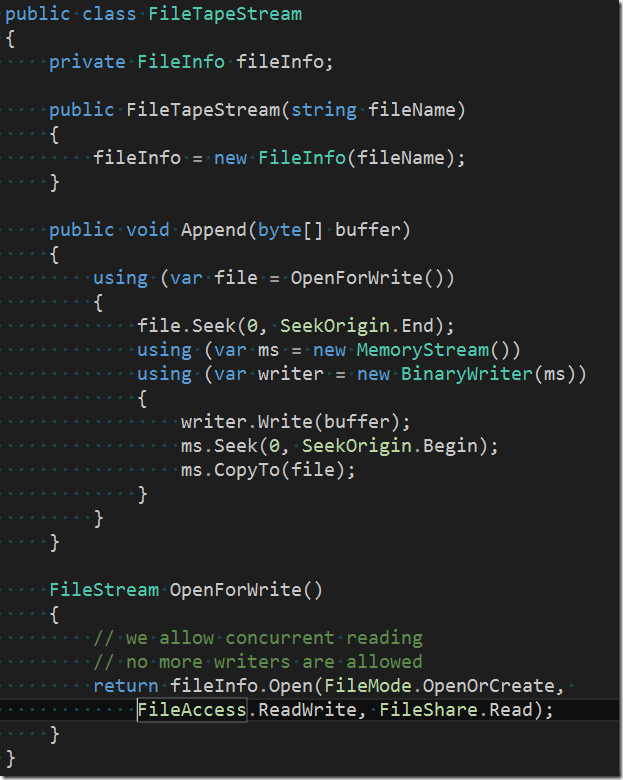

Assuming our event is serialized and available as an array of bytes, we can use the following code to append it to an existing file:
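A minimal sketch of such an append is shown here. It is an illustration under assumptions rather than a copy of our application code: the `EventFile` class, the `PathFor` helper and the `.events` file extension are hypothetical names.

```csharp
using System;
using System.IO;

public static class EventFile
{
    // Assumed layout: one file per aggregate instance, named after the aggregate ID.
    public static string PathFor(string folder, Guid aggregateId)
    {
        return Path.Combine(folder, aggregateId.ToString("N") + ".events");
    }

    public static void Append(string path, byte[] serializedEvent)
    {
        // FileMode.Append opens the file and seeks to its end,
        // or creates the file if it does not exist yet.
        using (var stream = new FileStream(path, FileMode.Append, FileAccess.Write, FileShare.Read))
        {
            stream.Write(serializedEvent, 0, serializedEvent.Length);

            // Force the data to disk before the transaction is considered committed.
            stream.Flush(true);
        }
    }
}
```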

The code is a simplified version of the production code we use in our application. What is missing is the code that also stores the length of the data buffer to append, as well as its version. Whilst incomplete, the above code snippet nevertheless shows that no magic is needed, and certainly no expensive database, to save (serialized) events to the file system.

Since we did not want to reinvent the wheel we chose to use the Lokad.CQRS “framework”. If you are interested in the full code just browse through the FileTapeStream class.

Loading the stream of events from the very same file is equally “easy” and the implementation can be found in the same class.
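To be able to load the events again, the reader has to know where one record ends and the next begins. The sketch below assumes the simplest possible framing, a 4-byte length prefix in front of each serialized event. This is not the format that `FileTapeStream` uses, it omits the version mentioned above, and `FramedEventFile` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class FramedEventFile
{
    public static void Append(string path, byte[] serializedEvent)
    {
        using (var stream = new FileStream(path, FileMode.Append, FileAccess.Write, FileShare.Read))
        using (var writer = new BinaryWriter(stream))
        {
            writer.Write(serializedEvent.Length); // 4-byte little-endian length prefix
            writer.Write(serializedEvent);        // raw payload, written as-is
        }
    }

    // Reads all records of one aggregate file in the order they were appended.
    public static List<byte[]> ReadAll(string path)
    {
        var events = new List<byte[]>();
        if (!File.Exists(path))
            return events; // no file yet means no events yet

        using (var stream = new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.ReadWrite))
        using (var reader = new BinaryReader(stream))
        {
            while (stream.Position < stream.Length)
            {
                int length = reader.ReadInt32();
                byte[] payload = reader.ReadBytes(length);
                if (payload.Length != length)
                    throw new InvalidDataException("Truncated event record.");
                events.Add(payload);
            }
        }
        return events;
    }
}
```

Each returned byte array can then be deserialized into its event and re-applied to a fresh aggregate.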

In my next post I will talk about how we can query the data. Stay tuned…