- Staying up to date with the latest build

- Command line friendly

- Generating nuspec files

- I didn’t have to change any of my markup or write any JavaScript

- I didn’t have to write an endpoint to serve up the JSON data for the jQuery plugin

- A simple FI/DSL à la FluentValidation

- Attribute markers for rules

- Mapping NHibernate configuration into validation (e.g., required)

One of the built-in sources adapts the IValidationRule interface (which operates at the class level) into a separate configuration of field validation rules. The Field Validation configuration is similar and has its own IFieldValidationSource to aggregate rules. To top it all off, the ValidationGraph (the semantic model) essentially memoizes the various paths to validation for optimization purposes.
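To make that composition concrete, here is a minimal Python sketch under simplifying assumptions. Every name below (`AttributeSource`, `ConventionSource`, the `__rules__` class attribute, string-valued rules) is hypothetical and only mirrors the concepts described above; it is not FubuValidation's .NET API. Several sources contribute rules for a type, and the graph memoizes the combined result per type so the sources are consulted only once.

```python
from typing import Dict, List, Protocol


class ValidationSource(Protocol):
    """Anything that can supply rule names for a class (hypothetical stand-in for IValidationSource)."""

    def rules_for(self, cls: type) -> List[str]: ...


class AttributeSource:
    """Reads rules declared on the class itself via a `__rules__` list (an assumption of this sketch)."""

    def rules_for(self, cls: type) -> List[str]:
        return list(getattr(cls, "__rules__", []))


class ConventionSource:
    """Generates an email rule on the fly for every annotated field whose name ends in 'Email'."""

    def rules_for(self, cls: type) -> List[str]:
        fields = getattr(cls, "__annotations__", {})
        return [f"email:{name}" for name in fields if name.endswith("Email")]


class ValidationGraph:
    """Aggregates all registered sources and memoizes the combined rules per class."""

    def __init__(self, sources: List[ValidationSource]) -> None:
        self._sources = sources
        self._cache: Dict[type, List[str]] = {}
        self.lookups = 0  # how many times the sources were actually consulted

    def rules_for(self, cls: type) -> List[str]:
        if cls not in self._cache:
            self.lookups += 1
            combined: List[str] = []
            for source in self._sources:
                combined.extend(source.rules_for(cls))
            self._cache[cls] = combined
        return self._cache[cls]


class User:
    __rules__ = ["required:Name"]
    Name: str
    ContactEmail: str


graph = ValidationGraph([AttributeSource(), ConventionSource()])
print(graph.rules_for(User))  # ['required:Name', 'email:ContactEmail']
graph.rules_for(User)         # served from the cache
print(graph.lookups)          # 1
```

The cache is keyed by type, which is the simplest form of memoization; a richer model could cache per property or per rule path.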

Naturally, this amount of flexibility makes the API…well, let’s just say it started to really suck.

First Iteration: The “Basic” pattern

One of the things we typically do for situations like this is expose a “Basic” static method on an implementation that composes the basic setup for a class. In addition to the static builders, the constructors of the various implementations add the default configuration.

This lets us do something like:
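A minimal Python sketch of the “Basic” idea, using hypothetical names (`Validator`, `required_name`, `basic()`) rather than FubuValidation's real types: the constructor wires in a default rule, and a static `basic()` builder gives tests a one-call way to get the standard setup.

```python
from typing import Callable, List, Optional

# A rule inspects a target and returns zero or more error messages.
Rule = Callable[[object], List[str]]


def required_name(target: object) -> List[str]:
    """Hypothetical default rule: the target must have a non-empty `name`."""
    return [] if getattr(target, "name", "") else ["name is required"]


class Validator:
    def __init__(self, rules: Optional[List[Rule]] = None) -> None:
        # The constructor adds the default configuration unless a test supplies its own rules.
        self.rules: List[Rule] = list(rules) if rules is not None else [required_name]

    @staticmethod
    def basic() -> "Validator":
        """One call that composes the standard setup, so tests never assemble it by hand."""
        return Validator()

    def validate(self, target: object) -> List[str]:
        errors: List[str] = []
        for rule in self.rules:
            errors.extend(rule(target))
        return errors


class Person:
    def __init__(self, name: str) -> None:
        self.name = name


print(Validator.basic().validate(Person("")))      # ['name is required']
print(Validator.basic().validate(Person("Josh")))  # []
```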

The Scenario Pattern

This pattern originated from FubuCore’s model binding and it’s been valuable ever since. The idea is that you have a static builder that exposes a way to configure the composition of a particular component. Here’s an example of the ValidationScenario:

The lambda allows you to specify field rules, validation sources, inject services, etc. It’s all located in the core FubuValidation library. This ensures that the composition stays up to date but minimizes any impact on YOU when you’re writing tests for your custom rules.
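Python has no multi-statement lambdas, so the sketch below takes a plain configuration function where the C# version would take a lambda. Everything here (`ScenarioConfig`, `field_rule`, `inject`, `ValidationScenario.run`) is a hypothetical illustration of the pattern, not FubuValidation's API: the scenario owns the composition, and the test supplies only the rules and services it cares about.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# A field rule sees the model and the injected services, and returns an error message or None.
Rule = Callable[[dict, Dict[str, object]], Optional[str]]


@dataclass
class ScenarioConfig:
    """Everything a single test is allowed to customize."""
    field_rules: Dict[str, List[Rule]] = field(default_factory=dict)
    services: Dict[str, object] = field(default_factory=dict)

    def field_rule(self, name: str, rule: Rule) -> None:
        self.field_rules.setdefault(name, []).append(rule)

    def inject(self, key: str, service: object) -> None:
        self.services[key] = service


@dataclass
class ValidationScenario:
    errors: List[str]

    @staticmethod
    def run(model: dict, configure: Callable[[ScenarioConfig], None]) -> "ValidationScenario":
        # The scenario owns the composition; if it changes, only this method changes, not every test.
        config = ScenarioConfig()
        configure(config)
        errors: List[str] = []
        for name, rules in config.field_rules.items():
            for rule in rules:
                message = rule(model, config.services)
                if message:
                    errors.append(f"{name}: {message}")
        return ValidationScenario(errors)


# Usage: a test for a custom rule that depends on an injected service.
def unique_username(model: dict, services: Dict[str, object]) -> Optional[str]:
    taken = services["existing_usernames"]
    return "already taken" if model.get("username") in taken else None


def configure(cfg: ScenarioConfig) -> None:
    cfg.field_rule("username", unique_username)
    cfg.inject("existing_usernames", {"josh"})


print(ValidationScenario.run({"username": "josh"}, configure).errors)  # ['username: already taken']
```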

Wrapping Up

“Investing in your tests” means exactly what it sounds like. Effort should be spent removing complexity, minimizing friction, and making sure that your tests are not brittle. Sometimes that means exposing helper methods on your APIs and other times it means building entirely different blocks of code to bootstrap tests.


- Data setup should be declarative

- Data setup should be as easy as you can possibly make it

- The inputs for your test belong with your test (it lives inside of it)

- Use default values whenever we can (e.g., birthdate will always be 03/11/1979 unless otherwise specified)

- Use string conversion techniques to build up more complex objects

- Using the sample database

- Open the grid screen

- Click on 2nd row

- There should be an error on the screen

- Test input (system state)

- Behavior

- Expected results

- Data setup must be declarative (think tabular inputs)

- Data setup must be as easy as possible

- The inputs and dependencies of the test live within the expression (see the ST screenshot above for an example)

- Rapid feedback cycle (Jeremy covered this one)

- System state

- Collapsing your application into a single process

- Defining test inputs

- Standardizing your UI mechanics

- Separating test expression from screen drivers

- Modeling steps for reuse

- Providing contextual information about failures

- Dealing with AJAX

- Utilizing White-box testing for cheaper tests

Back in the saddle

You know how when you haven’t blogged in a long time, you completely lose perspective on just HOW LONG it’s actually been? I pulled up my posts to check a while ago and realized it had been TWO YEARS since I last updated. I think it’s time to break radio silence.

Warning: this is a reflective post more than it is a technical post.

Where I’ve been

I went heads down after my last post, vigorously working on the Fubu ecosystem. At the close of 2013, I embarked on a new journey. I took a break from essentially ALL open source contributions, blogging, and Twitter. I rejoined the “mainstream” .NET world for a while and did a lot more work on various other platforms (NodeJS primarily). I worked on several SPAs and gained a much different perspective on everything else I had been missing.

I spent a lot of time last year rethinking old habits and philosophies on testing; most of them held up. I also spent a lot of time doing things much differently than I prefer to do them. I have a newfound appreciation and passion for testing that I think I had lost at some point.

Back in the saddle

A few months ago, I rejoined the team at Dovetail. I’ve been quietly catching up on the latest work within the Fubu ecosystem, meeting with Jeremy about his latest masterpiece(s), and finding my fit again. I’m sure blogging is part of that. I also fully plan to become active within the OSS community once more. I don’t know what that looks like exactly yet, but I will be part of the next Fubu release in some capacity.

Note: We did just have a new baby, so my availability is limited.

TL;DR: I’m still alive and will be much more vocal soon.

FubuValidation: Have validation your way

I’m happy to announce that another member of the Fubu family of projects has been documented. The project of the day is: FubuValidation. As usual, the docs go into far greater detail than I will here but I’ll provide some highlights.

Overview

FubuValidation is a member of the Fubu-family of frameworks — frameworks that aim to get out of your way by providing rich semantic models with powerful convention-driven operations. It aims to provide a convention-driven approach to validation while supporting more traditional approaches when needed.

An important thing to note: FubuValidation is NOT coupled to FubuMVC. In fact, it maintains no references to the project.

What’s different about it?

Inspired by the modularity patterns used in the Fubu ecosystem (and previous work with validation), FubuValidation utilizes the concept of an “IValidationSource”. That is, a source of rules for any particular class.

Any number of IValidationSource implementations can be registered and they can pull rules anywhere from attributes to generating them on the fly. You can use the built-in attributes, DSL, or write your own mechanism for creating rules based on NHibernate/EF mappings. The docs have plenty of examples of each.

On top of the extensibility, FubuValidation was designed with diagnostics in mind. While they are currently only surfaced in FubuMVC, the data structures used to query and report on validation rules are defined in FubuValidation. At any point you can query the ValidationGraph and find out not only what rules apply to your class but WHY they apply.
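As a rough illustration of the “why” idea, here is a hypothetical Python sketch in which each registered source has a name and the graph records which source contributed every rule. The source functions, the `__rules__`/`__not_null__` attributes, and `RuleReport` are assumptions made for the sketch; FubuValidation's real diagnostic types are not shown here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Each source is modeled as a named function from a class to rule names (an assumption).
SourceFn = Callable[[type], List[str]]


@dataclass(frozen=True)
class RuleReport:
    rule: str
    source: str  # the "why": which source contributed this rule


class ValidationGraph:
    def __init__(self, sources: Dict[str, SourceFn]) -> None:
        self._sources = sources

    def report(self, cls: type) -> List[RuleReport]:
        """List every rule that applies to `cls`, tagged with the source it came from."""
        return [
            RuleReport(rule, name)
            for name, source in self._sources.items()
            for rule in source(cls)
        ]


def attribute_source(cls: type) -> List[str]:
    return list(getattr(cls, "__rules__", []))


def mapping_source(cls: type) -> List[str]:
    # Hypothetical: non-nullable mapped columns (e.g. from an ORM mapping) become "required" rules.
    return [f"required:{column}" for column in getattr(cls, "__not_null__", [])]


class Order:
    __rules__ = ["max_length:Notes:500"]
    __not_null__ = ["CustomerId"]


graph = ValidationGraph({"attributes": attribute_source, "mapping": mapping_source})
for entry in graph.report(Order):
    print(f"{entry.rule} (from {entry.source})")
```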

Read the docs

Enough from me. Check out the new documentation and let us know what you think.

ripple: Fubu-inspired dependency management

I’m happy to announce that our ripple project is now publicly available and it’s sporting some brand new documentation. The docs go into greater detail than I’m going to write here but I’ll provide some highlights:

Overview:

Ripple is a new kind of package manager that was created out of heavy usage of the standard NuGet client. The feeds, the protocol, and the packages are the same. Ripple just embodies differing opinions and provides a new way of consuming them that is friendlier for continuous integration.

Features:

Ripple introduces the concept of “Fixed” vs. “Float” dependencies. For internal dependencies, it’s often beneficial to keep all of your downstream projects built against the very latest of your internal libraries. For the Fubu team, this means that changes to FubuMVC.Core “ripple” into downstream projects and help us find bugs FAST.
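A minimal Python sketch of the distinction, under stated assumptions: versions are plain `major.minor.patch` strings, the feed is just a list of available versions for one package, and `Dependency`/`resolve` are hypothetical names rather than ripple's implementation. A Fixed dependency stays on its recorded version; a Float dependency takes the newest version the feed offers.

```python
from dataclasses import dataclass
from typing import List, Tuple


def parse(version: str) -> Tuple[int, int, int]:
    """Turn 'major.minor.patch' into a tuple so versions compare numerically."""
    major, minor, patch = (int(part) for part in version.split("."))
    return (major, minor, patch)


@dataclass
class Dependency:
    name: str
    mode: str    # "Fixed" or "Float"
    pinned: str  # the version recorded for this dependency


def resolve(dep: Dependency, feed: List[str]) -> str:
    """Fixed stays on the pinned version; Float takes the newest version on the feed."""
    if dep.mode == "Fixed":
        if dep.pinned not in feed:
            raise ValueError(f"{dep.name} {dep.pinned} is not on the feed")
        return dep.pinned
    if dep.mode == "Float":
        return max(feed, key=parse)
    raise ValueError(f"unknown mode: {dep.mode}")


feed = ["1.0.0", "1.2.0", "1.10.0"]
print(resolve(Dependency("FubuMVC.Core", "Float", "1.0.0"), feed))    # 1.10.0
print(resolve(Dependency("SomeVendor.Lib", "Fixed", "1.2.0"), feed))  # 1.2.0
```

Comparing parsed tuples rather than raw strings matters here: as strings, "1.2.0" would sort after "1.10.0".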

Ripple is 100% command line and has no ties into Visual Studio. The usages were designed for how the Fubu team works and for integration with our build server.

Keeping package versions in sync can be a challenge when you have a lot of dependencies. Ripple provides the ability to automatically generate version constraints for the dependencies in your nuspec files so that you never get out of sync.
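The exact policy ripple applies isn't spelled out above, so the following Python sketch assumes one: allow anything from the currently installed version up to, but not including, the next major version. It writes that as a NuGet interval such as `[1.2.3,2.0.0)` inside a nuspec-style `<dependencies>` element; `version_constraint` and `dependencies_element` are hypothetical names.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def version_constraint(current: str) -> str:
    """Assumed policy: current version (inclusive) up to the next major version (exclusive)."""
    major, minor, patch = (int(part) for part in current.split("."))
    return f"[{major}.{minor}.{patch},{major + 1}.0.0)"


def dependencies_element(installed: Dict[str, str]) -> str:
    """Render nuspec <dependency> entries for every installed package, sorted by id."""
    deps = ET.Element("dependencies")
    for package_id, current in sorted(installed.items()):
        ET.SubElement(deps, "dependency", id=package_id, version=version_constraint(current))
    return ET.tostring(deps, encoding="unicode")


print(dependencies_element({"FubuCore": "1.2.3", "Bottles": "2.0.1"}))
```

Because the constraints are computed from what is actually installed, regenerating them on every build keeps the nuspec honest without hand edits.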

Getting Started:

Ripple is published both as a Ruby Gem (ripple-cli) and as a NuGet package (Ripple) — which you can use with Chocolatey.

You can read the “Edge” documentation here: [https://darthfubumvc.github.io/ripple](https://darthfubumvc.github.io/ripple)

_Note: The ripple docs are powered by our brand new “FubuDocs” tooling. Jeremy will likely be writing about that one soon. If he wasn’t planning on it…then I think I just volunteered him for it._

Introducing FubuMVC.AutoComplete

In my previous post, I introduced FubuMVC.Validation and the power of the “drop in Bottles” found in FubuMVC. Today, I’m happy to continue that series by introducing FubuMVC.AutoComplete.

This is a simplistic demonstration but I want to make note of two things:

Introducing FubuMVC.Validation. For real.

As some of you may already know, FubuMVC finally hit 1.0. In response to this milestone, Jeremy and I are hard at work on docs and trying to restrain the urge to write new features until those are done.

Today I’m happy to announce the first installment of screencasts is ready to share with the world. Validation isn’t the most exciting thing to talk about but watch carefully. This illustrates some of the killer features of FubuMVC that you’re going to want to pay attention to in the coming weeks.

Investing in your tests–A lesson in object composition

“Invest in your tests”. I say it all the time and it just never seems to carry the weight that I want it to. This bothers me. It bothers me so much that it’s generally in the back of my mind at any given point of my day. And then it happened…

In my last post, I talked about the joy of cleaning up code that I wrote two years ago. In the process of doing so, I made some observations about object composition and usability that gave me an “ah-ha” moment. I finally have a concrete example for this vague/abstract statement about quality and effort.

So, here it is: investing in your tests – a real life example. But let’s establish some context first.

Validator Configuration

The flexibility in composition of the Validator class in FubuValidation is something that we have pushed for since the beginning. We have the concept of an IValidationSource that can provide a collection of rules for a given type. Some examples would be:

Lessons from refactoring two year old code

About two years ago I took my first swing at FubuValidation and FubuMVC.Validation. Jeremy and I have been playing chicken on a cleanup of both of them for a long time now. Thankfully, two years later, I finally found the time/motivation/energy to dive in and take care of some nasty old code. I’ve found it very freeing to dive in and chainsaw the majority of everything I did.

I’ll try my best to keep this specific and not too philosophical, but I still wanted to share a few of my observations so far. Here goes:

1. Clumsy and brittle tests

When you try to adhere to all those crazy principles and maximum flexibility, yada yada…it’s easy to completely miss the mark with regards to the usability of your API. Knowing my approach to testing at the time, I can safely say that the usability of your API is directly related to the quality of your tests (over time). That is, if your tests are brittle and hard to read…chances are, your usability sucks.

2. Most of the time, lots of code means you’re doing it wrong

There are times when you’re tackling a problem that will result in a ton of code. On the other hand, I’m a student of the school of thought that says “your first idea isn’t necessarily the best one”. I’m not the smartest guy (and I’m quite aware of this) so my first ideas are usually pretty…well, they’re usually trash. It takes a few iterations for me to simplify it. Without using the API (through vigorous testing), there’s just no way to see how it’s going to end up.

3. Dogfooding is critical

Cliché, but it still counts.

4. YAGNI, YAGNI, YAGNI

I’m horrible at this. I think “Oh, surely someone would want THIS, and THIS, and THAT!”. Pick the simplest possible thing that can work and work it until it’s done. Make sure your tests can hold up, aren’t terribly brittle, and you can iterate fast. When you get a request or you hit the need yourself (*ahem* dogfooding), then you add it in.

The key here is to never be afraid to reevaluate your design. That’s typically the driving force behind me adding far too much fluff.

Guidelines for Automated Testing: Defining Test Inputs

There are several simple rules to follow when dealing with test setup for automated tests:

Let’s walk through some examples of these rules being applied. As usual, I’m going to use StoryTeller for my examples.

Data setup should be declarative

We use tabular structures to construct our test inputs. It feels a little spreadsheet-like but it’s the most natural way to input a decent sample population.

Let’s consider a simple example of declaring inputs for “People” in a system. For any given person, you will likely need their first/last name, and let’s also optionally capture an email address:

| First | Last | Email |
| --- | --- | --- |
| Josh | Arnold | josh@arnold.com |
| Olivia | Arnold | olivia@arnold.com |
| Joel | Arnold | |

Another important note here: avoid unnecessary setup of data that is unrelated to the test at hand. For example, if lookup values are required for every single test then create a mechanism to automatically create them.
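To show how little code a declarative table needs on the harness side, here is a hypothetical, framework-free Python sketch (not StoryTeller's API) that turns a pipe-delimited table like the one above into `Person` records, treating a blank or missing Email cell as “not specified”.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Person:
    first: str
    last: str
    email: Optional[str] = None


def people_from_table(table: str) -> List[Person]:
    """Turn a pipe-delimited table (header row first) into Person records."""
    lines = [line.strip() for line in table.strip().splitlines() if line.strip()]
    header = [cell.strip().lower() for cell in lines[0].strip("|").split("|")]
    people: List[Person] = []
    for line in lines[1:]:
        if set(line) <= set("|-: "):
            continue  # skip a markdown separator row such as | --- | --- |
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        # Blank or missing cells become None, so optional columns can be left empty.
        row = {name: (cells[i] if i < len(cells) and cells[i] else None) for i, name in enumerate(header)}
        people.append(Person(row["first"], row["last"], row.get("email")))
    return people


table = """
| First  | Last   | Email           |
| ------ | ------ | --------------- |
| Josh   | Arnold | josh@arnold.com |
| Joel   | Arnold |                 |
"""
people = people_from_table(table)
print(people[1])  # Person(first='Joel', last='Arnold', email=None)
```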

Data setup should be as easy as you can possibly make it

Often times the models that you are constructing aren’t as simple as “first/last/email”. You may have entities with various required inputs, variants, etc. You absolutely do not want to have to go through the ceremony of declaring them in every single test.

We beat this a couple of different ways:

In my current project, we have a grid with 30+ fields to maintain. The input that we specify per test leverages default values for field values so that we don’t have to constantly repeat ourselves. This is particularly useful when creating sample populations large enough to test out paging mechanics.
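A minimal Python sketch of the default-values approach, with hypothetical field names standing in for a much wider row: every field has a default (including the fixed birthdate of 03/11/1979), a test overrides only what matters to it, and a helper stamps out a population large enough to exercise paging.

```python
from typing import Any, Dict, List

# Hypothetical defaults; a real grid row would have many more fields.
DEFAULT_ROW: Dict[str, Any] = {
    "first_name": "Josh",
    "last_name": "Arnold",
    "email": None,
    "birthdate": "03/11/1979",  # always this value unless a test says otherwise
}


def build_row(**overrides: Any) -> Dict[str, Any]:
    """Start from the defaults and apply only the fields the test specifies."""
    unknown = set(overrides) - set(DEFAULT_ROW)
    if unknown:
        raise KeyError(f"unknown fields: {sorted(unknown)}")  # catch typos in test input early
    return {**DEFAULT_ROW, **overrides}


def build_population(count: int, **overrides: Any) -> List[Dict[str, Any]]:
    """Create `count` rows that differ only by last name, e.g. to test paging."""
    return [build_row(**{**overrides, "last_name": f"Person{i}"}) for i in range(count)]


olivia = build_row(first_name="Olivia", email="olivia@arnold.com")
page_test_rows = build_population(55)
```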

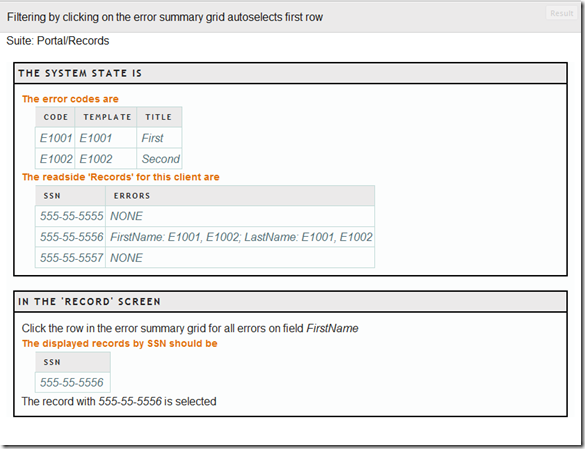

On top of the various fields we must maintain, we have errors that we track per row. Each row can have zero or more errors. Naturally, our test input must be able to support the entry of such errors. Now, rather than creating a separate tabular structure for this, we decided to allow for a particular string syntax that looks something like this:

`{field name}: ErrorCode1[, ErrorCode2] {field name}: …`

This allows us to input data like:

| First Name | Last Name | Errors |
| --- | --- | --- |
| Josh | Arnold | FirstName: E100 |
| Olivia | Arnold | NONE |
| Joel | Arnold | NONE |

We can accomplish this in StoryTeller with something like this:
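The StoryTeller-specific wiring depends on its grammar API, but the string conversion itself is small enough to sketch on its own. Here is a hypothetical, framework-free Python version that assumes field names are single words ending in a colon (as in `FirstName: E100`), codes are comma-separated, and `NONE` means no errors.

```python
from typing import Dict, List, Optional


def parse_errors(text: str) -> Dict[str, List[str]]:
    """Parse '{field}: Code1[, Code2] {field}: ...' into {field: [codes]}."""
    text = text.strip()
    if not text or text.upper() == "NONE":
        return {}
    errors: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for token in text.split():
        if token.endswith(":"):
            # A token ending in a colon starts a new field.
            current = token[:-1]
            errors.setdefault(current, [])
        elif current is None:
            raise ValueError(f"error code {token!r} appears before any field name")
        else:
            # Codes may be written 'E100, E101' or 'E100,E101'.
            errors[current].extend(code for code in token.split(",") if code)
    return errors


print(parse_errors("FirstName: E100"))                       # {'FirstName': ['E100']}
print(parse_errors("FirstName: E100, E101 LastName: E200"))  # {'FirstName': ['E100', 'E101'], 'LastName': ['E200']}
print(parse_errors("NONE"))                                  # {}
```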

The inputs for your test belong with your test (it lives inside of it)

This is not an attack on the ObjectMother pattern by any means. However, I strongly believe that in automated testing scenarios, the ObjectMother just doesn’t fit. Consider the following acceptance criteria:

There are so many things wrong with this that it’s hard to count. Let’s forget about lack of detail and focus on the real question: “What value does this test have?”

If you’re focusing on defining system behavior, then you’ve failed. This doesn’t describe any behavior at all. If you’re focusing on removing flaws, I think you’ve still failed. You’ve identified a problem but you’ve failed to capture the state of the system associated with it.

Here’s my point: tests are most useful when they are self-explanatory. I want to pull open a test and have everything that I need right at my fingertips. I don’t want to cross-reference other systems, emails, wiki pages, etc. to figure out what data exists for the test.

A well-defined acceptance test should look like this (it should look familiar):

Using StoryTeller for my examples, here’s what a test looks like (a condensed snippet from my current project):
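This is not the StoryTeller snippet itself but a hypothetical, framework-free Python sketch of the same shape: the system state is declared inside the test, followed by the behavior and the expected results. `GridScreen` is an in-memory stand-in for a real screen driver.

```python
from typing import Dict, List, Optional


class GridScreen:
    """Hypothetical in-memory stand-in for the grid screen; a real suite would drive a browser."""

    def __init__(self, rows: List[Dict[str, str]]) -> None:
        self.rows = rows
        self.selected: Optional[Dict[str, str]] = None

    def click_row(self, number: int) -> None:
        self.selected = self.rows[number - 1]  # 1-based, the way a person counts rows

    def visible_errors(self) -> List[str]:
        if self.selected is None:
            return []
        errors = self.selected.get("errors", "NONE")
        return [] if errors == "NONE" else [errors]


def test_second_row_shows_its_error() -> None:
    # Test input (system state): declared here, not in a shared sample database
    screen = GridScreen([
        {"first": "Olivia", "last": "Arnold", "errors": "NONE"},
        {"first": "Josh", "last": "Arnold", "errors": "FirstName: E100"},
    ])
    # Behavior
    screen.click_row(2)
    # Expected results
    assert screen.visible_errors() == ["FirstName: E100"]


test_second_row_shows_its_error()
```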

Wrapping it up

Let me reiterate my points here for sake of clarity. Automated tests that are easy to read, write, and maintain follow these rules:

Next time we’ll discuss how to standardize your UI mechanics.

Guidelines for Automated Testing

Overview

Continuing the theme of my most recent posts, I’ve decided to start a series on Automated Testing. I’ll be pulling from lessons learned on all of the crazy things that I’ve been involved with over the last year.

It’s a known fact that preemptively writing a table of contents means that you will never get around to finishing a series. Don’t worry, I’ve already written and scheduled each post.

Table of Contents

A lesson in automated testing via SlickGrid

Overview

Some time ago I became absolutely obsessed with testing – automated testing to be more specific. While I mostly blame Jeremy Miller for drilling the concepts and values into my skull, I’ve recently started wondering what changed in me. Where was the “ah-ha” moment? And then it finally hit me:

Automated testing (for me) has always involved far too much friction.

My development career has been a continuous mission to remove friction and avoid it at all costs. Rather than striving for quality in the realm of automated testing, I avoided the friction.

Until I learned how to do it “the Fubu way”.

As a result of some reflection over this last year, I’ve settled on one point that I feel is pivotal for frictionless testing.

Invest in your tests

In our last project, my team spent a significant portion of our development efforts working on testing infrastructure. This infrastructure allowed us to accomplish the things we needed to write our tests quickly but it was no small undertaking**. It paid off. Big time. We were able to add complex tests and build up an extremely extensive suite of regression tests.

My point is that if your team requires infrastructure for testing that does not exist, don’t be afraid to invest the time to create it. Believe it or not, there are areas of automated testing that have not yet been explored.

** Of course, the benefit to YOU is that this infrastructure is available to you via our Serenity project.

The principle in action

In our current project we are working with SlickGrid. Luckily, we had a bit of infrastructure in place for working with it through Jeremy’s work on FubuMVC.Diagnostics, which gave birth to the FubuMVC.SlickGrid project. This let us get up and running fairly quickly with a very basic grid. So, we could render a grid from our read model. Great. Now what about testing?

Let me give you a little context here. This isn’t a grid with 4-5 columns. No, we have 72 columns, each of which is displayed in various contexts (named groups, only columns with errors, etc.). We spiked out a couple of tests with StoryTeller/Serenity, but it quickly became apparent that we needed to invest some time in this.

We needed the ability to programmatically point at particular rows in the grid. It wasn’t always as simple as “the row with this ID”, either. Naturally, FubuMVC.SlickGrid.Serenity was born.

The SlickGrid + Serenity project gives you helpers that hang off of the IWebDriver interface. The main “brain” is found in the GridAction

You can access the underlying formatters, editors, and interact with the grid. More importantly, you can do all of that making use of the strongly-typed model that powers the conventions of the grid.
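The browser-facing half of this depends on Serenity and WebDriver, but the “which row?” question can be answered against the strongly-typed model alone. A hypothetical Python sketch (`Claim` and `GridRows` are illustrative names, not FubuMVC.SlickGrid.Serenity's API): find a row by a predicate over the row model instead of by a hard-coded ID or position.

```python
from dataclasses import dataclass
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")


@dataclass
class Claim:
    """Hypothetical row model backing the grid."""
    claim_id: int
    status: str
    amount: float


class GridRows(Generic[T]):
    """Answers 'which row?' from the same typed data the grid was rendered from."""

    def __init__(self, rows: List[T]) -> None:
        self._rows = rows

    def index_of(self, predicate: Callable[[T], bool]) -> int:
        """Zero-based index of the first row that matches, in display order."""
        for index, row in enumerate(self._rows):
            if predicate(row):
                return index
        raise LookupError("no row matches the predicate")


rows = GridRows([Claim(10, "Open", 5.0), Claim(11, "Rejected", 12.5), Claim(12, "Rejected", 3.0)])
print(rows.index_of(lambda c: c.status == "Rejected" and c.amount < 5))  # 2
```

A driver would then translate that index into the corresponding rendered row; how that is done depends on the grid's DOM and is left out of the sketch.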

Wrapping up

Too often I see developers bumping into walls with frameworks, tools, and approaches that limit testing. The limits rarely make it impossible to test but the friction involved with the testing serves as a strong demotivator. Testing is a hard discipline to get your team to follow. The last thing you need is to make it needlessly hard and painful.
